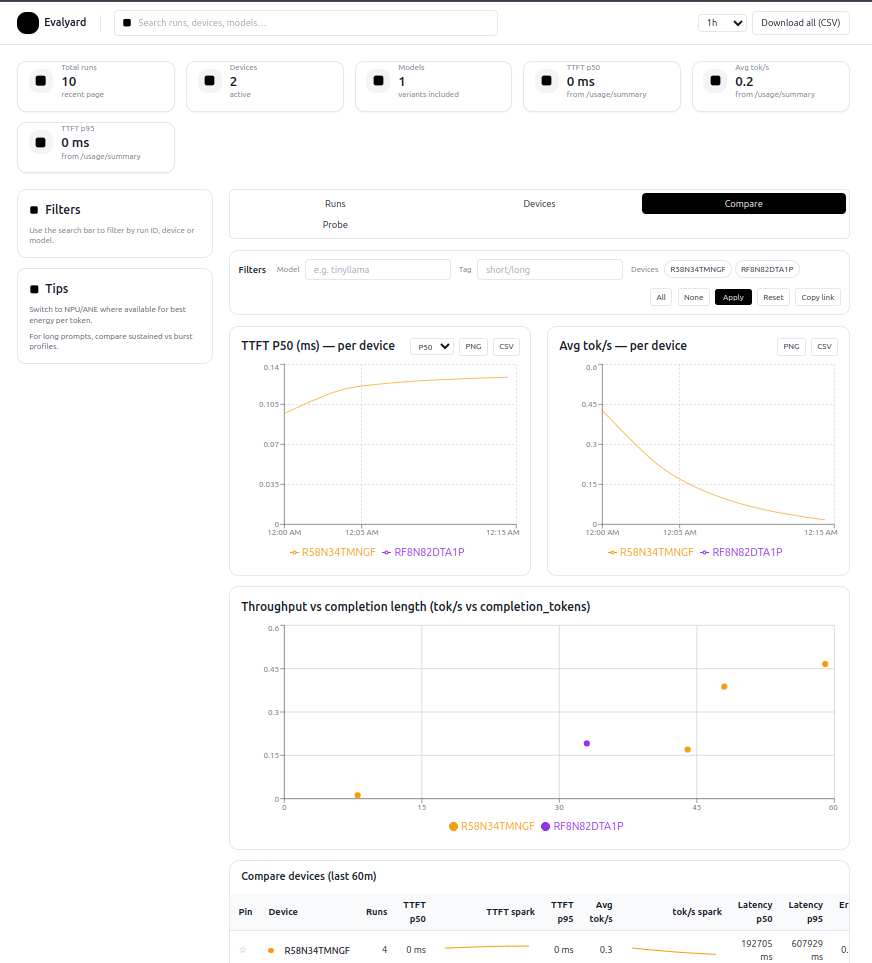

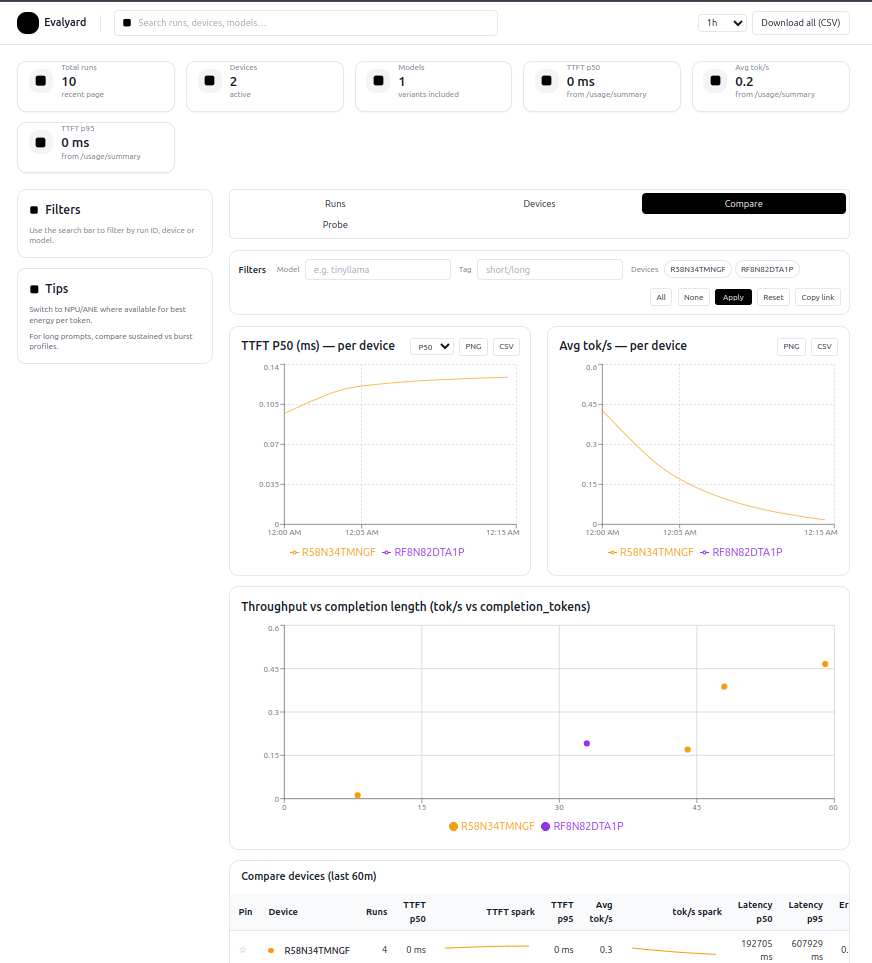

Benchmark & route LLMs on real Android devices

Evalyard combines a hosted dashboard with a real Android device lab, reporting TTFT (time to first token), tokens/sec, P50/P95 latency, throttling, temperature, and battery metrics.

Self-service dashboard is coming soon.
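The page does not spell out how these metrics are computed. As a rough illustration only (not Evalyard's implementation), here is a minimal Python sketch that derives TTFT, decode tokens/sec, and P50/P95 from per-request timestamps. The `GenerationRun` record and its field names are hypothetical. Tokens/sec is assumed to cover the decode phase only, although some tools divide by total request time instead. Percentiles use linear interpolation between ranks, which matches NumPy's default `percentile` method.

```python
import statistics
from dataclasses import dataclass


@dataclass
class GenerationRun:
    # Hypothetical record of one streamed generation. Times are in seconds
    # on a shared clock; output_tokens counts every generated token.
    request_start: float
    first_token_time: float
    last_token_time: float
    output_tokens: int


def ttft(run: GenerationRun) -> float:
    """Time to first token: from request start until the first token arrives."""
    return run.first_token_time - run.request_start


def decode_tokens_per_sec(run: GenerationRun) -> float:
    """Decode throughput: tokens after the first, over the first-to-last-token span."""
    elapsed = run.last_token_time - run.first_token_time
    if run.output_tokens < 2 or elapsed <= 0:
        return 0.0
    return (run.output_tokens - 1) / elapsed


def percentile(values: list[float], p: float) -> float:
    """Percentile (0 <= p <= 100) with linear interpolation between closest ranks."""
    if not values:
        raise ValueError("percentile of empty data")
    ordered = sorted(values)
    rank = (len(ordered) - 1) * p / 100
    lo = int(rank)
    hi = min(lo + 1, len(ordered) - 1)
    return ordered[lo] + (ordered[hi] - ordered[lo]) * (rank - lo)


def summarize(runs: list[GenerationRun]) -> dict[str, float]:
    """Aggregate per-run metrics into P50/P95 TTFT and median decode throughput."""
    ttfts = [ttft(r) for r in runs]
    rates = [decode_tokens_per_sec(r) for r in runs]
    return {
        "ttft_p50_s": percentile(ttfts, 50),
        "ttft_p95_s": percentile(ttfts, 95),
        "decode_tok_per_s_median": statistics.median(rates),
    }


# Made-up sample runs for illustration only (not Evalyard data).
EXAMPLE_RUNS = [
    GenerationRun(0.0, 0.25, 2.25, 101),
    GenerationRun(0.0, 0.50, 2.50, 81),
    GenerationRun(0.0, 0.75, 4.75, 161),
    GenerationRun(0.0, 1.00, 3.00, 121),
]

if __name__ == "__main__":
    print(summarize(EXAMPLE_RUNS))
```

With only a handful of runs, P95 is essentially an interpolation near the slowest sample. A stable tail estimate needs many repeated runs per device and model.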

Try the demo

Stream your own metrics into Evalyard, then add real devices when you need them.

Bring your metrics

Latency, quality, power — sent via API.

Unified bench view

API metrics and device runs in one UI.

Real devices layer

Attach phones for TTFT & on-device perf.

Explore & export

Slice, share, export CSVs & snapshots.

Run on real Android devices, or plug in your own metrics via API.

Use our Android lab or your own phones for real-device TTFT & throughput.

Stream latency / quality logs into Evalyard and reuse the same dashboards.

We can provision specific phones, build adapters, and share a read-only dashboard for your team.

Need fully isolated infrastructure or shipped devices? Ask about Enterprise Fabric.